20. Training and Testing with MNIST

By Bernd Klein. Last modified: 19 Apr 2024.

Using MNIST

The MNIST database (Modified National Institute of Standards and Technology database) of handwritten digits consists of a training set of 60,000 examples, and a test set of 10,000 examples. It is a subset of a larger set available from NIST. Additionally, the black and white images from NIST were size-normalized and centered to fit into a 28x28 pixel bounding box and anti-aliased, which introduced grayscale levels.

This database is widely used for training and testing in the fields of machine learning and image processing. It is a remixed subset of the original NIST datasets. One half of the 60,000 training images comes from NIST's testing dataset and the other half from NIST's training set. The 10,000 images of the test set are assembled in the same way.

Researchers use the MNIST dataset to test their methods and to compare their results with those of others. The lowest error rates reported in the literature are as low as 0.21 percent [1].


Reading the MNIST data set

The images from the data set have the size 28 x 28. They are saved in the csv data files mnist_train.csv and mnist_test.csv.

Every line of these files consists of an image, i.e. 785 numbers between 0 and 255.

The first number of each line is the label, i.e. the digit which is depicted in the image. The following 784 numbers are the pixels of the 28 x 28 image.
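The row layout described above can be illustrated with a minimal sketch. The row used here is a made-up, shortened stand-in (a label followed by four pixel values for an assumed 2 x 2 image), not a real MNIST row, which would hold 1 + 784 numbers:

```python
import numpy as np

# Hypothetical shortened row: label first, then the pixel values.
# A real MNIST row has 785 numbers; 2 x 2 is assumed here for brevity.
image_size = 2
row = np.array([7, 0, 128, 255, 64], dtype=np.float64)

label = int(row[0])     # first number: the digit shown in the image
pixels = row[1:]        # remaining numbers: the pixel values
image = pixels.reshape((image_size, image_size))

print(label)
print(image.shape)
```

With the real data the same slicing applies, only with image_size = 28.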

import numpy as np
import matplotlib.pyplot as plt

image_size = 28  # width and height
no_of_different_labels = 10  # i.e. 0, 1, 2, 3, ..., 9
image_pixels = image_size * image_size
data_path = "../data/mnist/"
train_data = np.loadtxt(data_path + "mnist_train.csv",
                        delimiter=",")
test_data = np.loadtxt(data_path + "mnist_test.csv",
                       delimiter=",")
test_data[:10]

OUTPUT:

array([[7., 0., 0., ..., 0., 0., 0.],
       [2., 0., 0., ..., 0., 0., 0.],
       [1., 0., 0., ..., 0., 0., 0.],
       ...,
       [9., 0., 0., ..., 0., 0., 0.],
       [5., 0., 0., ..., 0., 0., 0.],
       [9., 0., 0., ..., 0., 0., 0.]])

test_data[test_data==255]

test_data.shape

OUTPUT:

(10000, 785)

The images of the MNIST dataset are greyscale, and the pixel values range from 0 to 255, both bounds included. We map these values into the interval [0.01, 1] by multiplying each pixel by 0.99 / 255 and adding 0.01 to the result. This way, we avoid 0 values as inputs, which can prevent weight updates, as we have seen in the introductory chapter.

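fac = 0.99 / 255
# pixel columns as floats, scaled into the interval [0.01, 1]
train_imgs = np.asarray(train_data[:, 1:], dtype=np.float64) * fac + 0.01
test_imgs = np.asarray(test_data[:, 1:], dtype=np.float64) * fac + 0.01
# label column, kept as a column vector of shape (n, 1)
train_labels = np.asarray(train_data[:, :1], dtype=np.float64)
test_labels = np.asarray(test_data[:, :1], dtype=np.float64)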
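To see what the scaling does at the boundaries, here is a small check on a handful of hand-picked pixel values (the array raw is an illustrative assumption, not part of the dataset code):

```python
import numpy as np

fac = 0.99 / 255

# A few hand-picked raw pixel values, including both extremes
raw = np.array([0, 1, 128, 255], dtype=np.float64)
scaled = raw * fac + 0.01

# The smallest value becomes 0.01 and the largest 1.0 (up to rounding),
# so no input is exactly 0.
print(scaled.min(), scaled.max())
```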

We need the labels in our calculations in a one-hot representation. We have 10 digits from 0 to 9, i.e. lr = np.arange(10).

Turning a label into its one-hot representation can be achieved with the expression (lr==label).astype(np.int8)

We demonstrate this in the following:

import numpy as np

lr = np.arange(10)
for label in range(10):
    one_hot = (lr==label).astype(np.int8)
    print("label: ", label, " in one-hot representation: ", one_hot)

OUTPUT:

label: 0 in one-hot representation: [1 0 0 0 0 0 0 0 0 0]
label: 1 in one-hot representation: [0 1 0 0 0 0 0 0 0 0]
label: 2 in one-hot representation: [0 0 1 0 0 0 0 0 0 0]
label: 3 in one-hot representation: [0 0 0 1 0 0 0 0 0 0]
label: 4 in one-hot representation: [0 0 0 0 1 0 0 0 0 0]
label: 5 in one-hot representation: [0 0 0 0 0 1 0 0 0 0]
label: 6 in one-hot representation: [0 0 0 0 0 0 1 0 0 0]
label: 7 in one-hot representation: [0 0 0 0 0 0 0 1 0 0]
label: 8 in one-hot representation: [0 0 0 0 0 0 0 0 1 0]
label: 9 in one-hot representation: [0 0 0 0 0 0 0 0 0 1]

We are now ready to turn our labelled images into one-hot representations. Instead of zeros and ones, we use 0.01 and 0.99, which work better in our calculations:

lr = np.arange(no_of_different_labels)

# transform labels into one-hot representation
train_labels_one_hot = (lr==train_labels).astype(np.float64)
test_labels_one_hot = (lr==test_labels).astype(np.float64)

# we don't want zeros and ones in the labels either:
train_labels_one_hot[train_labels_one_hot==0] = 0.01
train_labels_one_hot[train_labels_one_hot==1] = 0.99
test_labels_one_hot[test_labels_one_hot==0] = 0.01
test_labels_one_hot[test_labels_one_hot==1] = 0.99
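The comparison lr==train_labels works on the whole label array at once because of NumPy broadcasting: a column of shape (n, 1) compared with a row of shape (10,) yields an (n, 10) array. A minimal sketch on three made-up labels (the array labels is an assumption for illustration):

```python
import numpy as np

lr = np.arange(10)

# Three made-up labels as a column vector, shaped like train_labels
labels = np.array([[3.], [0.], [9.]])

# (3, 1) compared with (10,) broadcasts to (3, 10)
one_hot = (lr == labels).astype(np.float64)
one_hot[one_hot == 0] = 0.01
one_hot[one_hot == 1] = 0.99

print(one_hot.shape)
print(one_hot[0])
```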

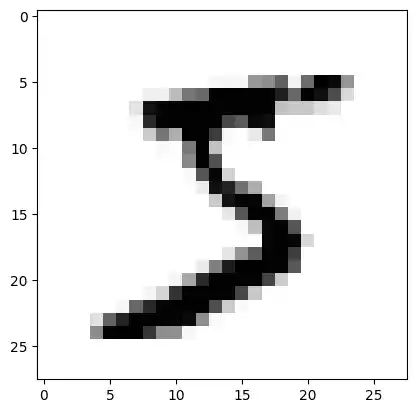

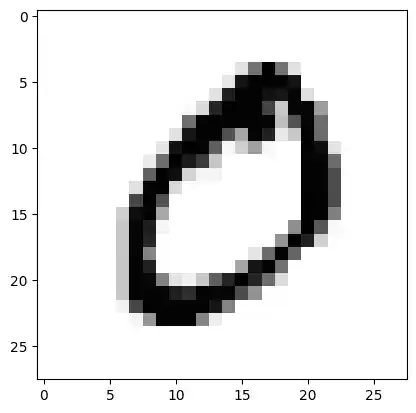

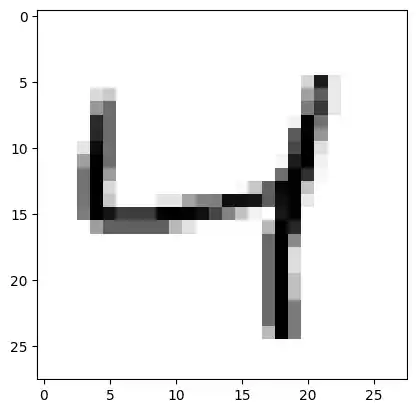

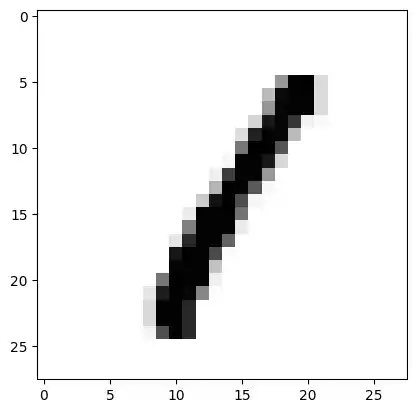

Before we start using the MNIST data sets with our neural network, we will have a look at some images:

for i in range(10):
    img = train_imgs[i].reshape((28,28))
    plt.imshow(img, cmap="Greys")
    plt.show()

Dumping the Data for Faster Reload

You may have noticed that it is quite slow to read in the data from the csv files.

We will save the data in binary format with the dump function from the pickle module:

import pickle

with open("../data/mnist/pickled_mnist.pkl", "bw") as fh:
    data = (train_imgs,
            test_imgs,
            train_labels,
            test_labels)
    pickle.dump(data, fh)

We can now read the data back in with pickle.load. This is a lot faster than using loadtxt on the csv files:

import pickle
import numpy as np

with open("../data/mnist/pickled_mnist.pkl", "br") as fh:
    data = pickle.load(fh)

train_imgs = data[0]
test_imgs = data[1]
train_labels = data[2]
test_labels = data[3]

image_size = 28  # width and height
no_of_different_labels = 10  # i.e. 0, 1, 2, 3, ..., 9
image_pixels = image_size * image_size

# lr has to exist before the one-hot labels can be rebuilt
lr = np.arange(no_of_different_labels)
train_labels_one_hot = (lr==train_labels).astype(np.float64)
test_labels_one_hot = (lr==test_labels).astype(np.float64)

# as before, use 0.01 and 0.99 instead of 0 and 1
train_labels_one_hot[train_labels_one_hot==0] = 0.01
train_labels_one_hot[train_labels_one_hot==1] = 0.99
test_labels_one_hot[test_labels_one_hot==0] = 0.01
test_labels_one_hot[test_labels_one_hot==1] = 0.99
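The dump and load steps can be tried out without the real dataset. The following sketch is only an illustration: it uses small random arrays as stand-ins for the image and label arrays, and a temporary file with the hypothetical name demo_mnist.pkl:

```python
import os
import pickle
import tempfile

import numpy as np

# Small stand-ins for the real image and label arrays
rng = np.random.default_rng(0)
imgs = rng.random((5, 784))
labels = np.array([[3.], [1.], [4.], [1.], [5.]])

# Hypothetical file name inside a fresh temporary directory
path = os.path.join(tempfile.mkdtemp(), "demo_mnist.pkl")

with open(path, "wb") as fh:
    pickle.dump((imgs, labels), fh)

with open(path, "rb") as fh:
    imgs_loaded, labels_loaded = pickle.load(fh)

# The arrays come back unchanged, including shape and dtype
print(np.array_equal(imgs, imgs_loaded), labels_loaded.shape)
```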

Classifying the Data

We will use the following neural network class for our first classification:

import numpy as np

@np.vectorize
def sigmoid(x):
    return 1 / (1 + np.e ** -x)

activation_function = sigmoid

from scipy.stats import truncnorm

def truncated_normal(mean=0, sd=1, low=0, upp=10):
    return truncnorm((low - mean) / sd,
                     (upp - mean) / sd,
                     loc=mean,
                     scale=sd)

class NeuralNetwork:

    def __init__(self,
                 no_of_in_nodes,
                 no_of_out_nodes,
                 no_of_hidden_nodes,
                 learning_rate):
        self.no_of_in_nodes = no_of_in_nodes
        self.no_of_out_nodes = no_of_out_nodes
        self.no_of_hidden_nodes = no_of_hidden_nodes
        self.learning_rate = learning_rate
        self.create_weight_matrices()

    def create_weight_matrices(self):
        """
        A method to initialize the weight
        matrices of the neural network
        """
        rad = 1 / np.sqrt(self.no_of_in_nodes)
        X = truncated_normal(mean=0,
                             sd=1,
                             low=-rad,
                             upp=rad)
        self.wih = X.rvs((self.no_of_hidden_nodes,
                          self.no_of_in_nodes))
        rad = 1 / np.sqrt(self.no_of_hidden_nodes)
        X = truncated_normal(mean=0, sd=1, low=-rad, upp=rad)
        self.who = X.rvs((self.no_of_out_nodes,
                          self.no_of_hidden_nodes))

    def train(self, input_vector, target_vector):
        """
        input_vector and target_vector can
        be tuple, list or ndarray
        """
        input_vector = np.array(input_vector, ndmin=2).T
        target_vector = np.array(target_vector, ndmin=2).T

        output_vector1 = np.dot(self.wih,
                                input_vector)
        output_hidden = activation_function(output_vector1)

        output_vector2 = np.dot(self.who,
                                output_hidden)
        output_network = activation_function(output_vector2)

        output_errors = target_vector - output_network
        # update the weights:
        tmp = output_errors * output_network \
              * (1.0 - output_network)
        tmp = self.learning_rate * np.dot(tmp,
                                          output_hidden.T)
        self.who += tmp

        # calculate hidden errors:
        hidden_errors = np.dot(self.who.T,
                               output_errors)
        # update the weights:
        tmp = hidden_errors * output_hidden * \
              (1.0 - output_hidden)
        self.wih += self.learning_rate \
                    * np.dot(tmp, input_vector.T)

    def run(self, input_vector):
        # input_vector can be tuple, list or ndarray
        input_vector = np.array(input_vector, ndmin=2).T

        output_vector = np.dot(self.wih,
                               input_vector)
        output_vector = activation_function(output_vector)

        output_vector = np.dot(self.who,
                               output_vector)
        output_vector = activation_function(output_vector)

        return output_vector

    def confusion_matrix(self, data_array, labels):
        # rows: predicted digit, columns: true digit
        cm = np.zeros((10, 10), int)
        for i in range(len(data_array)):
            res = self.run(data_array[i])
            res_max = res.argmax()
            target = labels[i][0]
            cm[res_max, int(target)] += 1
        return cm

    def precision(self, label, confusion_matrix):
        # row: all samples that were classified as `label`
        row = confusion_matrix[label, :]
        return confusion_matrix[label, label] / row.sum()

    def recall(self, label, confusion_matrix):
        # column: all samples whose true digit is `label`
        col = confusion_matrix[:, label]
        return confusion_matrix[label, label] / col.sum()

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

ANN = NeuralNetwork(no_of_in_nodes = image_pixels,
                    no_of_out_nodes = 10,
                    no_of_hidden_nodes = 100,
                    learning_rate = 0.1)

for i in range(len(train_imgs)):
    ANN.train(train_imgs[i], train_labels_one_hot[i])

for i in range(20):
    res = ANN.run(test_imgs[i])
    print(test_labels[i], np.argmax(res), np.max(res))

OUTPUT:

[7.] 7 0.9979028412988024
[2.] 2 0.9461552524083486
[1.] 1 0.9988473463714598
[0.] 0 0.9868767441455373
[4.] 4 0.9904712994835662
[1.] 1 0.9989044149999307
[4.] 4 0.9474458721172182
[9.] 9 0.9971323526661966
[5.] 6 0.1726742248261814
[9.] 9 0.9844324555497621
[0.] 0 0.9861460541274183
[6.] 6 0.9466668750512497
[9.] 9 0.9967175442346061
[0.] 0 0.9946260472845094
[1.] 1 0.9986347590497112
[5.] 5 0.8653309153038081
[9.] 9 0.9954821448307862
[7.] 7 0.9953739798564898
[3.] 3 0.9262009482992026
[4.] 4 0.9976241749651665

corrects, wrongs = ANN.evaluate(train_imgs, train_labels)
print("accuracy train: ", corrects / ( corrects + wrongs))
corrects, wrongs = ANN.evaluate(test_imgs, test_labels)
print("accuracy: test", corrects / ( corrects + wrongs))

cm = ANN.confusion_matrix(train_imgs, train_labels)
print(cm)

for i in range(10):
    print("digit: ", i, "precision: ", ANN.precision(i, cm), "recall: ", ANN.recall(i, cm))

OUTPUT:

accuracy train: 0.94715
accuracy: test 0.9457
[[5809    0   54   22    9   44   48   21   21   28]
 [   1 6630   60   25   14   33   22   54  119   12]
 [   1   20 5489   45   12    9    4   57   14    4]
 [   6   40  118 5836    3  133    1   78  162   78]
 [  11   14   64    6 5501   41    8   47   32   83]
 [   9    5    5   57    2 4960   27    2   24   10]
 [  35    2   48   18   46   67 5786    3   35    3]
 [   1    5   31   35    8    3    0 5808    4   34]
 [  35   11   76   43    9   52   22   18 5332   19]
 [  15   15   13   44  238   79    0  177  108 5678]]
digit: 0 precision: 0.959214002642008 recall: 0.9807529967921661
digit: 1 precision: 0.9512195121951219 recall: 0.9833877187778107
digit: 2 precision: 0.9706454465075155 recall: 0.9212823094998321
digit: 3 precision: 0.9041053446940356 recall: 0.9518838688631545
digit: 4 precision: 0.9473049767521956 recall: 0.9416295789113317
digit: 5 precision: 0.9723583611056655 recall: 0.914960339420771
digit: 6 precision: 0.957471454575542 recall: 0.9776951672862454
digit: 7 precision: 0.9795918367346939 recall: 0.9270550678371907
digit: 8 precision: 0.9492611714438313 recall: 0.9112972141514271
digit: 9 precision: 0.8917857703785143 recall: 0.9544461253992268
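For reference, precision and recall can also be computed for all classes at once. The sketch below uses the standard definitions (precision: correct predictions divided by everything predicted as that class; recall: correct predictions divided by everything that truly belongs to that class) on a small made-up 3-class matrix. It assumes the same layout as cm[res_max, int(target)] above, i.e. rows are predictions and columns are true labels:

```python
import numpy as np

# Made-up 3-class confusion matrix, layout cm[predicted, actual]
cm = np.array([[5, 1, 0],
               [0, 3, 2],
               [1, 0, 4]])

true_positives = np.diag(cm)

# Row sums: everything the classifier predicted as that class
precision = true_positives / cm.sum(axis=1)

# Column sums: everything that truly belongs to that class
recall = true_positives / cm.sum(axis=0)

accuracy = true_positives.sum() / cm.sum()

print(precision)
print(recall)
print(accuracy)
```

If the matrix were filled the other way round (rows as true labels), the two axis arguments would have to be swapped.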

Multiple Runs

We can repeat the training multiple times. Each complete pass over the training set is called an "epoch".

epochs = 10

NN = NeuralNetwork(no_of_in_nodes = image_pixels,
                   no_of_out_nodes = 10,
                   no_of_hidden_nodes = 100,
                   learning_rate = 0.1)

for epoch in range(epochs):
    print("epoch: ", epoch)
    for i in range(len(train_imgs)):
        NN.train(train_imgs[i],
                 train_labels_one_hot[i])

    corrects, wrongs = NN.evaluate(train_imgs, train_labels)
    print("accuracy train: ", corrects / ( corrects + wrongs))
    corrects, wrongs = NN.evaluate(test_imgs, test_labels)
    print("accuracy: test", corrects / ( corrects + wrongs))

OUTPUT:

epoch: 0
accuracy train: 0.9428333333333333
accuracy: test 0.9412
epoch: 1
accuracy train: 0.9622
accuracy: test 0.9561
epoch: 2
accuracy train: 0.9696833333333333
accuracy: test 0.962
epoch: 3
accuracy train: 0.9739
accuracy: test 0.9644
epoch: 4
accuracy train: 0.97665
accuracy: test 0.9655
epoch: 5
accuracy train: 0.9795166666666667
accuracy: test 0.9677
epoch: 6
accuracy train: 0.9812833333333333
accuracy: test 0.9684
epoch: 7
accuracy train: 0.9816166666666667
accuracy: test 0.9681
epoch: 8
accuracy train: 0.98425
accuracy: test 0.9712
epoch: 9
accuracy train: 0.9848833333333333
accuracy: test 0.9701

We want to run the repeated passes over the training set inside our network class. For this purpose we rewrite the method train and add a method train_single. train_single is more or less what we called train before, whereas the new train method handles the epoch counting. For testing purposes, we save the weight matrices after each epoch in the list intermediate_weights. This list is returned as the output of train:

import numpy as np

@np.vectorize
def sigmoid(x):
    return 1 / (1 + np.e ** -x)

activation_function = sigmoid

from scipy.stats import truncnorm

def truncated_normal(mean=0, sd=1, low=0, upp=10):
    return truncnorm((low - mean) / sd,
                     (upp - mean) / sd,
                     loc=mean,
                     scale=sd)

class NeuralNetwork:

    def __init__(self,
                 no_of_in_nodes,
                 no_of_out_nodes,
                 no_of_hidden_nodes,
                 learning_rate):
        self.no_of_in_nodes = no_of_in_nodes
        self.no_of_out_nodes = no_of_out_nodes
        self.no_of_hidden_nodes = no_of_hidden_nodes
        self.learning_rate = learning_rate
        self.create_weight_matrices()

    def create_weight_matrices(self):
        """ A method to initialize the weight matrices of the neural network"""
        rad = 1 / np.sqrt(self.no_of_in_nodes)
        X = truncated_normal(mean=0,
                             sd=1,
                             low=-rad,
                             upp=rad)
        self.wih = X.rvs((self.no_of_hidden_nodes,
                          self.no_of_in_nodes))
        rad = 1 / np.sqrt(self.no_of_hidden_nodes)
        X = truncated_normal(mean=0,
                             sd=1,
                             low=-rad,
                             upp=rad)
        self.who = X.rvs((self.no_of_out_nodes,
                          self.no_of_hidden_nodes))

    def train_single(self, input_vector, target_vector):
        """
        input_vector and target_vector can be tuple,
        list or ndarray
        """
        output_vectors = []
        input_vector = np.array(input_vector, ndmin=2).T
        target_vector = np.array(target_vector, ndmin=2).T

        output_vector1 = np.dot(self.wih,
                                input_vector)
        output_hidden = activation_function(output_vector1)

        output_vector2 = np.dot(self.who,
                                output_hidden)
        output_network = activation_function(output_vector2)

        output_errors = target_vector - output_network
        # update the weights:
        tmp = output_errors * output_network * \
              (1.0 - output_network)
        tmp = self.learning_rate * np.dot(tmp,
                                          output_hidden.T)
        self.who += tmp

        # calculate hidden errors:
        hidden_errors = np.dot(self.who.T,
                               output_errors)
        # update the weights:
        tmp = hidden_errors * output_hidden * (1.0 - output_hidden)
        self.wih += self.learning_rate * np.dot(tmp, input_vector.T)

    def train(self, data_array,
              labels_one_hot_array,
              epochs=1,
              intermediate_results=False):
        intermediate_weights = []
        for epoch in range(epochs):
            print("*", end="")
            for i in range(len(data_array)):
                self.train_single(data_array[i],
                                  labels_one_hot_array[i])
            if intermediate_results:
                intermediate_weights.append((self.wih.copy(),
                                             self.who.copy()))
        return intermediate_weights

    def confusion_matrix(self, data_array, labels):
        cm = {}
        for i in range(len(data_array)):
            res = self.run(data_array[i])
            res_max = res.argmax()
            target = labels[i][0]
            if (target, res_max) in cm:
                cm[(target, res_max)] += 1
            else:
                cm[(target, res_max)] = 1
        return cm

    def run(self, input_vector):
        """ input_vector can be tuple, list or ndarray """
        input_vector = np.array(input_vector, ndmin=2).T

        output_vector = np.dot(self.wih,
                               input_vector)
        output_vector = activation_function(output_vector)

        output_vector = np.dot(self.who,
                               output_vector)
        output_vector = activation_function(output_vector)

        return output_vector

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

epochs = 10

ANN = NeuralNetwork(no_of_in_nodes = image_pixels,
                    no_of_out_nodes = 10,
                    no_of_hidden_nodes = 100,
                    learning_rate = 0.15)

weights = ANN.train(train_imgs,
                    train_labels_one_hot,
                    epochs=epochs,
                    intermediate_results=True)

OUTPUT:

**********

cm = ANN.confusion_matrix(train_imgs, train_labels)

print(ANN.run(train_imgs[i]))

OUTPUT:

[[1.28087077e-03]
 [1.07254869e-09]
 [5.30910305e-05]
 [1.34352894e-07]
 [1.99509208e-08]
 [5.30616407e-04]
 [4.37127717e-08]
 [2.69301526e-05]
 [9.98653378e-01]
 [5.06814170e-04]]

cm = list(cm.items())

print(sorted(cm))

OUTPUT:

[((0.0, 0), 5894), ((0.0, 2), 3), ((0.0, 3), 1), ((0.0, 4), 4), ((0.0, 5), 5), ((0.0, 6), 2), ((0.0, 8), 6), ((0.0, 9), 8), ((1.0, 0), 1), ((1.0, 1), 6706), ((1.0, 2), 10), ((1.0, 3), 1), ((1.0, 4), 5), ((1.0, 5), 2), ((1.0, 7), 3), ((1.0, 8), 8), ((1.0, 9), 6), ((2.0, 0), 13), ((2.0, 1), 14), ((2.0, 2), 5873), ((2.0, 3), 13), ((2.0, 4), 5), ((2.0, 5), 6), ((2.0, 6), 2), ((2.0, 7), 13), ((2.0, 8), 12), ((2.0, 9), 7), ((3.0, 0), 6), ((3.0, 1), 9), ((3.0, 2), 15), ((3.0, 3), 5994), ((3.0, 4), 1), ((3.0, 5), 51), ((3.0, 6), 3), ((3.0, 7), 12), ((3.0, 8), 16), ((3.0, 9), 24), ((4.0, 0), 8), ((4.0, 1), 3), ((4.0, 2), 7), ((4.0, 4), 5699), ((4.0, 5), 2), ((4.0, 6), 11), ((4.0, 7), 1), ((4.0, 8), 8), ((4.0, 9), 103), ((5.0, 0), 9), ((5.0, 1), 2), ((5.0, 2), 2), ((5.0, 3), 15), ((5.0, 4), 1), ((5.0, 5), 5369), ((5.0, 6), 7), ((5.0, 7), 1), ((5.0, 8), 4), ((5.0, 9), 11), ((6.0, 0), 37), ((6.0, 1), 5), ((6.0, 4), 4), ((6.0, 5), 45), ((6.0, 6), 5815), ((6.0, 8), 11), ((6.0, 9), 1), ((7.0, 0), 6), ((7.0, 1), 15), ((7.0, 2), 25), ((7.0, 3), 5), ((7.0, 4), 9), ((7.0, 5), 2), ((7.0, 7), 6143), ((7.0, 8), 5), ((7.0, 9), 55), ((8.0, 0), 10), ((8.0, 1), 40), ((8.0, 2), 4), ((8.0, 3), 31), ((8.0, 4), 4), ((8.0, 5), 34), ((8.0, 6), 14), ((8.0, 7), 3), ((8.0, 8), 5680), ((8.0, 9), 31), ((9.0, 0), 11), ((9.0, 1), 2), ((9.0, 2), 1), ((9.0, 3), 8), ((9.0, 4), 11), ((9.0, 5), 12), ((9.0, 6), 1), ((9.0, 7), 8), ((9.0, 8), 7), ((9.0, 9), 5888)]

for i in range(epochs):
    print("epoch: ", i)
    ANN.wih = weights[i][0]
    ANN.who = weights[i][1]

    corrects, wrongs = ANN.evaluate(train_imgs, train_labels)
    print("accuracy train: ", corrects / ( corrects + wrongs))
    corrects, wrongs = ANN.evaluate(test_imgs, test_labels)
    print("accuracy: test", corrects / ( corrects + wrongs))

OUTPUT:

epoch: 0
accuracy train: 0.9444333333333333
accuracy: test 0.944
epoch: 1
accuracy train: 0.9602666666666667
accuracy: test 0.9565
epoch: 2
accuracy train: 0.9657833333333333
accuracy: test 0.9587
epoch: 3
accuracy train: 0.9704833333333334
accuracy: test 0.9625
epoch: 4
accuracy train: 0.9738333333333333
accuracy: test 0.9634
epoch: 5
accuracy train: 0.9789
accuracy: test 0.967
epoch: 6
accuracy train: 0.9785333333333334
accuracy: test 0.9655
epoch: 7
accuracy train: 0.9814833333333334
accuracy: test 0.9682
epoch: 8
accuracy train: 0.9833666666666666
accuracy: test 0.9709
epoch: 9
accuracy train: 0.98435
accuracy: test 0.9689
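The calls to .copy() when storing the intermediate weights matter, because the weight matrices are updated in place with +=. The following toy sketch (with a made-up 2 x 2 array w standing in for a weight matrix) shows the difference between storing a reference and storing a copy:

```python
import numpy as np

w = np.zeros((2, 2))          # stand-in for a weight matrix
snapshots_by_reference = []
snapshots_by_copy = []

for epoch in range(3):
    w += 1.0                              # in-place update, like `self.who += tmp`
    snapshots_by_reference.append(w)      # the same array object every time
    snapshots_by_copy.append(w.copy())    # an independent snapshot

by_reference = [float(s[0, 0]) for s in snapshots_by_reference]
by_copy = [float(s[0, 0]) for s in snapshots_by_copy]

print(by_reference)  # [3.0, 3.0, 3.0]
print(by_copy)       # [1.0, 2.0, 3.0]
```

Without the copy, every list entry would show the weights of the final epoch, and the per-epoch accuracies would all be identical.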


With Bias Nodes

import numpy as np

@np.vectorize
def sigmoid(x):
    return 1 / (1 + np.e ** -x)

activation_function = sigmoid

from scipy.stats import truncnorm

def truncated_normal(mean=0, sd=1, low=0, upp=10):
    return truncnorm((low - mean) / sd,
                     (upp - mean) / sd,
                     loc=mean,
                     scale=sd)

class NeuralNetwork:

    def __init__(self,
                 no_of_in_nodes,
                 no_of_out_nodes,
                 no_of_hidden_nodes,
                 learning_rate,
                 bias=None
                ):
        self.no_of_in_nodes = no_of_in_nodes
        self.no_of_out_nodes = no_of_out_nodes
        self.no_of_hidden_nodes = no_of_hidden_nodes
        self.learning_rate = learning_rate
        self.bias = bias
        self.create_weight_matrices()

    def create_weight_matrices(self):
        """
        A method to initialize the weight
        matrices of the neural network with
        optional bias nodes
        """
        bias_node = 1 if self.bias else 0

        rad = 1 / np.sqrt(self.no_of_in_nodes + bias_node)
        X = truncated_normal(mean=0,
                             sd=1,
                             low=-rad,
                             upp=rad)
        self.wih = X.rvs((self.no_of_hidden_nodes,
                          self.no_of_in_nodes + bias_node))

        rad = 1 / np.sqrt(self.no_of_hidden_nodes + bias_node)
        X = truncated_normal(mean=0, sd=1, low=-rad, upp=rad)
        self.who = X.rvs((self.no_of_out_nodes,
                          self.no_of_hidden_nodes + bias_node))

    def train(self, input_vector, target_vector):
        """
        input_vector and target_vector can
        be tuple, list or ndarray
        """
        bias_node = 1 if self.bias else 0
        if self.bias:
            # adding bias node to the end of the input_vector
            input_vector = np.concatenate((input_vector,
                                           [self.bias]) )

        input_vector = np.array(input_vector, ndmin=2).T
        target_vector = np.array(target_vector, ndmin=2).T

        output_vector1 = np.dot(self.wih,
                                input_vector)
        output_hidden = activation_function(output_vector1)

        if self.bias:
            output_hidden = np.concatenate((output_hidden,
                                            [[self.bias]]) )

        output_vector2 = np.dot(self.who,
                                output_hidden)
        output_network = activation_function(output_vector2)

        output_errors = target_vector - output_network
        # update the weights:
        tmp = output_errors * output_network * (1.0 - output_network)
        tmp = self.learning_rate * np.dot(tmp, output_hidden.T)
        self.who += tmp

        # calculate hidden errors:
        hidden_errors = np.dot(self.who.T,
                               output_errors)
        # update the weights:
        tmp = hidden_errors * output_hidden * (1.0 - output_hidden)
        if self.bias:
            # drop the last row, which belongs to the bias node
            x = np.dot(tmp, input_vector.T)[:-1,:]
        else:
            x = np.dot(tmp, input_vector.T)
        self.wih += self.learning_rate * x

    def run(self, input_vector):
        """
        input_vector can be tuple, list or ndarray
        """
        if self.bias:
            # adding bias node to the end of the input_vector,
            # using the same bias value as in train
            input_vector = np.concatenate((input_vector, [self.bias]) )
        input_vector = np.array(input_vector, ndmin=2).T

        output_vector = np.dot(self.wih,
                               input_vector)
        output_vector = activation_function(output_vector)

        if self.bias:
            output_vector = np.concatenate( (output_vector,
                                             [[self.bias]]) )

        output_vector = np.dot(self.who,
                               output_vector)
        output_vector = activation_function(output_vector)

        return output_vector

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

ANN = NeuralNetwork(no_of_in_nodes=image_pixels,
                    no_of_out_nodes=10,
                    no_of_hidden_nodes=200,
                    learning_rate=0.1,
                    bias=None)

for i in range(len(train_imgs)):
    ANN.train(train_imgs[i], train_labels_one_hot[i])

for i in range(20):
    res = ANN.run(test_imgs[i])
    print(test_labels[i], np.argmax(res), np.max(res))

OUTPUT:

[7.] 7 0.9995785883736796
[2.] 2 0.9708204747960228
[1.] 1 0.9997132666068997
[0.] 0 0.9971228311924519
[4.] 4 0.9690009530639812
[1.] 1 0.9996170089997094
[4.] 4 0.9968400335887104
[9.] 9 0.9977498009684621
[5.] 6 0.11101686469489755
[9.] 9 0.9986404217328356
[0.] 0 0.9857022340290854
[6.] 6 0.830599159951893
[9.] 9 0.9996500566788566
[0.] 0 0.9988058217015645
[1.] 1 0.9996801544197353
[5.] 5 0.9130121668840985
[9.] 9 0.9989904329945136
[7.] 7 0.9979784265219221
[3.] 3 0.8336386866206579
[4.] 4 0.9993982252972375

corrects, wrongs = ANN.evaluate(train_imgs, train_labels)
print("accuracy train: ", corrects / ( corrects + wrongs))
corrects, wrongs = ANN.evaluate(test_imgs, test_labels)
print("accuracy: test", corrects / ( corrects + wrongs))

OUTPUT:

accuracy train: 0.9487666666666666
accuracy: test 0.9504
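To make the role of the bias node in the class above more tangible, here is a small shape-level sketch. The sizes (4 inputs, 3 hidden nodes), the bias value 0.5 and the uniform random weights are assumptions chosen for illustration only. Appending the bias value to the input and giving the weight matrix one extra column is equivalent to adding bias times the last weight column to the ordinary matrix product:

```python
import numpy as np

# Tiny hypothetical sizes and bias value, for illustration only
no_of_in_nodes, no_of_hidden_nodes = 4, 3
bias = 0.5

rng = np.random.default_rng(42)
# One extra column in the weight matrix for the bias node
wih = rng.uniform(-0.5, 0.5, (no_of_hidden_nodes, no_of_in_nodes + 1))

x = np.array([0.1, 0.2, 0.3, 0.4])
x_with_bias = np.concatenate((x, [bias]))        # shape (5,)
x_col = np.array(x_with_bias, ndmin=2).T         # shape (5, 1)

hidden_input = np.dot(wih, x_col)                # shape (3, 1)

# Equivalent view: plain weights times input, plus bias times last column
manual = np.dot(wih[:, :-1], x.reshape(-1, 1)) + bias * wih[:, -1:]

print(x_col.shape, hidden_input.shape)
print(np.allclose(hidden_input, manual))
```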

Version with Bias and Epochs:

import numpy as np

@np.vectorize
def sigmoid(x):
    return 1 / (1 + np.e ** -x)

activation_function = sigmoid

from scipy.stats import truncnorm

def truncated_normal(mean=0, sd=1, low=0, upp=10):
    return truncnorm((low - mean) / sd,
                     (upp - mean) / sd,
                     loc=mean,
                     scale=sd)

class NeuralNetwork:

    def __init__(self,
                 no_of_in_nodes,
                 no_of_out_nodes,
                 no_of_hidden_nodes,
                 learning_rate,
                 bias=None
                ):
        self.no_of_in_nodes = no_of_in_nodes
        self.no_of_out_nodes = no_of_out_nodes
        self.no_of_hidden_nodes = no_of_hidden_nodes
        self.learning_rate = learning_rate
        self.bias = bias
        self.create_weight_matrices()

    def create_weight_matrices(self):
        """
        A method to initialize the weight matrices
        of the neural network with optional
        bias nodes"""
        bias_node = 1 if self.bias else 0

        rad = 1 / np.sqrt(self.no_of_in_nodes + bias_node)
        X = truncated_normal(mean=0, sd=1, low=-rad, upp=rad)
        self.wih = X.rvs((self.no_of_hidden_nodes,
                          self.no_of_in_nodes + bias_node))

        rad = 1 / np.sqrt(self.no_of_hidden_nodes + bias_node)
        X = truncated_normal(mean=0,
                             sd=1,
                             low=-rad,
                             upp=rad)
        self.who = X.rvs((self.no_of_out_nodes,
                          self.no_of_hidden_nodes + bias_node))

    def train_single(self, input_vector, target_vector):
        """
        input_vector and target_vector can be tuple,
        list or ndarray
        """
        bias_node = 1 if self.bias else 0
        if self.bias:
            # adding bias node to the end of the input_vector
            input_vector = np.concatenate( (input_vector,
                                            [self.bias]) )

        output_vectors = []
        input_vector = np.array(input_vector, ndmin=2).T
        target_vector = np.array(target_vector, ndmin=2).T

        output_vector1 = np.dot(self.wih,
                                input_vector)
        output_hidden = activation_function(output_vector1)

        if self.bias:
            output_hidden = np.concatenate((output_hidden,
                                            [[self.bias]]) )

        output_vector2 = np.dot(self.who,
                                output_hidden)
        output_network = activation_function(output_vector2)

        output_errors = target_vector - output_network
        # update the weights:
        tmp = output_errors * output_network * (1.0 - output_network)
        tmp = self.learning_rate * np.dot(tmp,
                                          output_hidden.T)
        self.who += tmp

        # calculate hidden errors:
        hidden_errors = np.dot(self.who.T,
                               output_errors)
        # update the weights:
        tmp = hidden_errors * output_hidden * (1.0 - output_hidden)
        if self.bias:
            # drop the last row, which belongs to the bias node
            x = np.dot(tmp, input_vector.T)[:-1,:]
        else:
            x = np.dot(tmp, input_vector.T)
        self.wih += self.learning_rate * x

    def train(self, data_array,
              labels_one_hot_array,
              epochs=1,
              intermediate_results=False):
        intermediate_weights = []
        for epoch in range(epochs):
            for i in range(len(data_array)):
                self.train_single(data_array[i],
                                  labels_one_hot_array[i])
            if intermediate_results:
                intermediate_weights.append((self.wih.copy(),
                                             self.who.copy()))
        return intermediate_weights

    def run(self, input_vector):
        # input_vector can be tuple, list or ndarray
        if self.bias:
            # adding bias node to the end of the input_vector
            input_vector = np.concatenate( (input_vector,
                                            [self.bias]) )
        input_vector = np.array(input_vector, ndmin=2).T

        output_vector = np.dot(self.wih,
                               input_vector)
        output_vector = activation_function(output_vector)

        if self.bias:
            output_vector = np.concatenate( (output_vector,
                                             [[self.bias]]) )

        output_vector = np.dot(self.who,
                               output_vector)
        output_vector = activation_function(output_vector)

        return output_vector

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

epochs = 12

network = NeuralNetwork(no_of_in_nodes=image_pixels,
                        no_of_out_nodes=10,
                        no_of_hidden_nodes=100,
                        learning_rate=0.1,
                        bias=None)

weights = network.train(train_imgs,
                        train_labels_one_hot,
                        epochs=epochs,
                        intermediate_results=True)

for epoch in range(epochs):
    print("epoch: ", epoch)
    network.wih = weights[epoch][0]
    network.who = weights[epoch][1]

    corrects, wrongs = network.evaluate(train_imgs,
                                        train_labels)
    print("accuracy train: ", corrects / ( corrects + wrongs))
    corrects, wrongs = network.evaluate(test_imgs,
                                        test_labels)
    print("accuracy test: ", corrects / ( corrects + wrongs))

OUTPUT:

epoch: 0
accuracy train: 0.94595
accuracy test: 0.9455
epoch: 1
accuracy train: 0.9622
accuracy test: 0.9574
epoch: 2
accuracy train: 0.96945
accuracy test: 0.9615
epoch: 3
accuracy train: 0.9747666666666667
accuracy test: 0.9643
epoch: 4
accuracy train: 0.97855
accuracy test: 0.9662
epoch: 5
accuracy train: 0.9800666666666666
accuracy test: 0.9663
epoch: 6
accuracy train: 0.9814166666666667
accuracy test: 0.967
epoch: 7
accuracy train: 0.9828333333333333
accuracy test: 0.9671
epoch: 8
accuracy train: 0.9847
accuracy test: 0.9697
epoch: 9
accuracy train: 0.9858833333333333
accuracy test: 0.9703
epoch: 10
accuracy train: 0.9867
accuracy test: 0.9702
epoch: 11
accuracy train: 0.9880666666666666
accuracy test: 0.9702

epochs = 12

with open("nist_tests.csv", "w") as fh_out:
    for hidden_nodes in [20, 50, 100, 150]:
        for learning_rate in [0.05, 0.1, 0.2]:
            for bias in [None, 0.5]:
                network = NeuralNetwork(no_of_in_nodes=image_pixels,
                                        no_of_out_nodes=10,
                                        no_of_hidden_nodes=hidden_nodes,
                                        learning_rate=learning_rate,
                                        bias=bias)
                weights = network.train(train_imgs,
                                        train_labels_one_hot,
                                        epochs=epochs,
                                        intermediate_results=True)
                for epoch in range(epochs):
                    print("*", end="")
                    network.wih = weights[epoch][0]
                    network.who = weights[epoch][1]
                    train_corrects, train_wrongs = network.evaluate(train_imgs,
                                                                    train_labels)
                    test_corrects, test_wrongs = network.evaluate(test_imgs,
                                                                  test_labels)
                    outstr = str(hidden_nodes) + " " + str(learning_rate) + " " + str(bias)
                    outstr += " " + str(epoch) + " "
                    outstr += str(train_corrects / (train_corrects + train_wrongs)) + " "
                    outstr += str(train_wrongs / (train_corrects + train_wrongs)) + " "
                    outstr += str(test_corrects / (test_corrects + test_wrongs)) + " "
                    outstr += str(test_wrongs / (test_corrects + test_wrongs))
                    fh_out.write(outstr + "\n" )
                    fh_out.flush()

OUTPUT:

************************************************************************************************************************************************************************************************************************************************************************************************

The file nist_tests_20_50_100_120_150.csv contains the results from a run of the previous program.
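A results file in this format can be read back to find the best configuration. The following is a rough sketch only: the three rows are made-up sample values (not results from an actual run), and the column order is taken from the way outstr is assembled in the loop above:

```python
import io

# Made-up sample rows in the format written by the loop above:
# hidden_nodes learning_rate bias epoch train_acc train_err test_acc test_err
sample = io.StringIO(
    "20 0.05 None 0 0.90 0.10 0.89 0.11\n"
    "20 0.05 None 1 0.92 0.08 0.91 0.09\n"
    "50 0.1 0.5 0 0.93 0.07 0.92 0.08\n"
)

results = []
for line in sample:
    hidden, rate, bias, epoch, train_acc, _, test_acc, _ = line.split()
    results.append({
        "hidden_nodes": int(hidden),
        "learning_rate": float(rate),
        "bias": None if bias == "None" else float(bias),
        "epoch": int(epoch),
        "train_accuracy": float(train_acc),
        "test_accuracy": float(test_acc),
    })

# Pick the row with the highest test accuracy
best = max(results, key=lambda r: r["test_accuracy"])
print(best["hidden_nodes"], best["learning_rate"], best["bias"], best["epoch"])
```

For a real file, the io.StringIO object would be replaced by an opened file handle.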


Networks with multiple hidden layers

We will write a new neural network class, in which we can define an arbitrary number of hidden layers. The code is also improved, because the weight matrices are now built inside a loop instead of with redundant code:

import numpy as np

from scipy.special import expit as activation_function

from scipy.stats import truncnorm

def truncated_normal(mean=0, sd=1, low=0, upp=10):

return truncnorm((low - mean) / sd,

(upp - mean) / sd,

loc=mean,

scale=sd)

class NeuralNetwork:

def __init__(self,

network_structure, # ie. [input_nodes, hidden1_nodes, ... , hidden_n_nodes, output_nodes]

learning_rate,

bias=None

):

self.structure = network_structure

self.learning_rate = learning_rate

self.bias = bias

self.create_weight_matrices()

def create_weight_matrices(self):

bias_node = 1 if self.bias else 0

self.weights_matrices = []

layer_index = 1

no_of_layers = len(self.structure)

while layer_index < no_of_layers:

nodes_in = self.structure[layer_index-1]

nodes_out = self.structure[layer_index]

n = (nodes_in + bias_node) * nodes_out

rad = 1 / np.sqrt(nodes_in)

X = truncated_normal(mean=2,

sd=1,

low=-rad,

upp=rad)

wm = X.rvs(n).reshape((nodes_out, nodes_in + bias_node))

self.weights_matrices.append(wm)

layer_index += 1

    def train(self, input_vector, target_vector):
        """
        input_vector and target_vector can be tuple,
        list or ndarray
        """
        no_of_layers = len(self.structure)
        input_vector = np.array(input_vector, ndmin=2).T
        layer_index = 0
        # The output/input vectors of the various layers:
        res_vectors = [input_vector]
        while layer_index < no_of_layers - 1:
            in_vector = res_vectors[-1]
            if self.bias:
                # adding bias node to the end of the 'input'_vector
                in_vector = np.concatenate((in_vector,
                                            [[self.bias]]))
                res_vectors[-1] = in_vector
            x = np.dot(self.weights_matrices[layer_index],
                       in_vector)
            out_vector = activation_function(x)
            # the output of one layer is the input of the next one:
            res_vectors.append(out_vector)
            layer_index += 1

        layer_index = no_of_layers - 1
        target_vector = np.array(target_vector, ndmin=2).T
        # error at the output layer (target minus actual output)
        output_errors = target_vector - out_vector
        # walk backwards through the layers and update the weights
        while layer_index > 0:
            out_vector = res_vectors[layer_index]
            in_vector = res_vectors[layer_index-1]
            if self.bias and not layer_index==(no_of_layers-1):
                out_vector = out_vector[:-1,:].copy()
            tmp = output_errors * out_vector * (1.0 - out_vector)
            tmp = np.dot(tmp, in_vector.T)
            #if self.bias:
            #    tmp = tmp[:-1,:]
            self.weights_matrices[layer_index-1] += self.learning_rate * tmp
            output_errors = np.dot(self.weights_matrices[layer_index-1].T,
                                   output_errors)
            if self.bias:
                output_errors = output_errors[:-1,:]
            layer_index -= 1

    def run(self, input_vector):
        # input_vector can be tuple, list or ndarray
        no_of_layers = len(self.structure)
        if self.bias:
            # adding bias node to the end of the input_vector
            input_vector = np.concatenate((input_vector,
                                           [self.bias]))
        in_vector = np.array(input_vector, ndmin=2).T
        layer_index = 1
        # propagate the input through the layers
        while layer_index < no_of_layers:
            x = np.dot(self.weights_matrices[layer_index-1],
                       in_vector)
            out_vector = activation_function(x)
            # input vector for next layer
            in_vector = out_vector
            if self.bias:
                in_vector = np.concatenate((in_vector,
                                            [[self.bias]]))
            layer_index += 1
        return out_vector

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

ANN = NeuralNetwork(network_structure=[image_pixels, 50, 50, 10],
                    learning_rate=0.1,
                    bias=None)

for i in range(len(train_imgs)):
    ANN.train(train_imgs[i], train_labels_one_hot[i])

corrects, wrongs = ANN.evaluate(train_imgs, train_labels)
print("accuracy train: ", corrects / (corrects + wrongs))
corrects, wrongs = ANN.evaluate(test_imgs, test_labels)
print("accuracy test: ", corrects / (corrects + wrongs))
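To make the loop in create_weight_matrices more concrete, the following sketch lists the shapes of the weight matrices that result from a given network structure. It is only an illustration and not part of the class: the helper name weight_matrix_shapes is hypothetical, and the value 784 is assumed to stand for image_pixels (28 x 28).

```python
def weight_matrix_shapes(network_structure, bias=None):
    """Return the (rows, columns) shape of every weight matrix.

    Mirrors the bookkeeping of create_weight_matrices: one matrix per
    pair of consecutive layers, with one extra column if a bias is used.
    """
    bias_node = 1 if bias else 0
    shapes = []
    for nodes_in, nodes_out in zip(network_structure[:-1],
                                   network_structure[1:]):
        shapes.append((nodes_out, nodes_in + bias_node))
    return shapes


# 784 input nodes, two hidden layers with 50 nodes each, 10 output nodes
shapes_no_bias = weight_matrix_shapes([784, 50, 50, 10])
shapes_bias = weight_matrix_shapes([784, 50, 50, 10], bias=1)

print(shapes_no_bias)   # [(50, 784), (50, 50), (10, 50)]
print(shapes_bias)      # [(50, 785), (50, 51), (10, 51)]

# total number of trainable weights without a bias node
total_weights = sum(rows * cols for rows, cols in shapes_no_bias)
print(total_weights)    # 42200
```

A structure with n layers therefore always yields n - 1 weight matrices, and each matrix has as many rows as the layer it feeds into.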

Networks with multiple hidden layers and epochs

import numpy as np
from scipy.special import expit as activation_function
from scipy.stats import truncnorm


def truncated_normal(mean=0, sd=1, low=0, upp=10):
    return truncnorm((low - mean) / sd,
                     (upp - mean) / sd,
                     loc=mean,
                     scale=sd)

class NeuralNetwork:

    def __init__(self,
                 network_structure,  # i.e. [input_nodes, hidden1_nodes, ... , hidden_n_nodes, output_nodes]
                 learning_rate,
                 bias=None
                 ):
        self.structure = network_structure
        self.learning_rate = learning_rate
        self.bias = bias
        self.create_weight_matrices()

    def create_weight_matrices(self):
        bias_node = 1 if self.bias else 0
        self.weights_matrices = []
        layer_index = 1
        no_of_layers = len(self.structure)
        # one weight matrix for every pair of consecutive layers
        while layer_index < no_of_layers:
            nodes_in = self.structure[layer_index-1]
            nodes_out = self.structure[layer_index]
            # number of weights between the two layers
            n = (nodes_in + bias_node) * nodes_out
            rad = 1 / np.sqrt(nodes_in)
            X = truncated_normal(mean=2, sd=1, low=-rad, upp=rad)
            wm = X.rvs(n).reshape((nodes_out, nodes_in + bias_node))
            self.weights_matrices.append(wm)
            layer_index += 1

    def train_single(self, input_vector, target_vector):
        # input_vector and target_vector can be tuple, list or ndarray
        no_of_layers = len(self.structure)
        input_vector = np.array(input_vector, ndmin=2).T
        layer_index = 0
        # The output/input vectors of the various layers:
        res_vectors = [input_vector]
        while layer_index < no_of_layers - 1:
            in_vector = res_vectors[-1]
            if self.bias:
                # adding bias node to the end of the 'input'_vector
                in_vector = np.concatenate((in_vector,
                                            [[self.bias]]))
                res_vectors[-1] = in_vector
            x = np.dot(self.weights_matrices[layer_index], in_vector)
            out_vector = activation_function(x)
            # the output of one layer is the input of the next one:
            res_vectors.append(out_vector)
            layer_index += 1

        layer_index = no_of_layers - 1
        target_vector = np.array(target_vector, ndmin=2).T
        # error at the output layer (target minus actual output)
        output_errors = target_vector - out_vector
        # walk backwards through the layers and update the weights
        while layer_index > 0:
            out_vector = res_vectors[layer_index]
            in_vector = res_vectors[layer_index-1]
            if self.bias and not layer_index==(no_of_layers-1):
                out_vector = out_vector[:-1,:].copy()
            tmp = output_errors * out_vector * (1.0 - out_vector)
            tmp = np.dot(tmp, in_vector.T)
            #if self.bias:
            #    tmp = tmp[:-1,:]
            self.weights_matrices[layer_index-1] += self.learning_rate * tmp
            output_errors = np.dot(self.weights_matrices[layer_index-1].T,
                                   output_errors)
            if self.bias:
                output_errors = output_errors[:-1,:]
            layer_index -= 1

    def train(self, data_array,
              labels_one_hot_array,
              epochs=1,
              intermediate_results=False):
        intermediate_weights = []
        for epoch in range(epochs):
            for i in range(len(data_array)):
                self.train_single(data_array[i], labels_one_hot_array[i])
            if intermediate_results:
                # snapshot of all weight matrices after this epoch
                intermediate_weights.append(
                    [wm.copy() for wm in self.weights_matrices])
        return intermediate_weights

    def run(self, input_vector):
        # input_vector can be tuple, list or ndarray
        no_of_layers = len(self.structure)
        if self.bias:
            # adding bias node to the end of the input_vector
            input_vector = np.concatenate((input_vector, [self.bias]))
        in_vector = np.array(input_vector, ndmin=2).T
        layer_index = 1
        # propagate the input through the layers
        while layer_index < no_of_layers:
            x = np.dot(self.weights_matrices[layer_index-1],
                       in_vector)
            out_vector = activation_function(x)
            # input vector for next layer
            in_vector = out_vector
            if self.bias:
                in_vector = np.concatenate((in_vector,
                                            [[self.bias]]))
            layer_index += 1
        return out_vector

    def evaluate(self, data, labels):
        corrects, wrongs = 0, 0
        for i in range(len(data)):
            res = self.run(data[i])
            res_max = res.argmax()
            if res_max == labels[i]:
                corrects += 1
            else:
                wrongs += 1
        return corrects, wrongs

epochs = 3

ANN = NeuralNetwork(network_structure=[image_pixels, 80, 80, 10],
                    learning_rate=0.01,
                    bias=None)

ANN.train(train_imgs, train_labels_one_hot, epochs=epochs)

corrects, wrongs = ANN.evaluate(train_imgs, train_labels)
print("accuracy train: ", corrects / (corrects + wrongs))
corrects, wrongs = ANN.evaluate(test_imgs, test_labels)
print("accuracy test: ", corrects / (corrects + wrongs))
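Because train accepts an epochs argument, the accuracy can also be observed after every single epoch by calling it repeatedly with epochs=1. The following is a minimal sketch of this idea. It assumes only that the network object offers train(data, labels_one_hot, epochs=1) and evaluate(data, labels) as defined above. The helper accuracy_per_epoch and the class DummyNetwork are hypothetical; the dummy exists solely so that the example runs without the MNIST files.

```python
def accuracy_per_epoch(network, train_data, train_labels_one_hot,
                       eval_data, eval_labels, epochs):
    """Train one epoch at a time and record the accuracy after each epoch."""
    accuracies = []
    for _ in range(epochs):
        network.train(train_data, train_labels_one_hot, epochs=1)
        corrects, wrongs = network.evaluate(eval_data, eval_labels)
        accuracies.append(corrects / (corrects + wrongs))
    return accuracies


class DummyNetwork:
    """Hypothetical stand-in: classifies one more sample correctly per epoch."""

    def __init__(self):
        self.epochs_seen = 0

    def train(self, data, labels_one_hot, epochs=1):
        # no real learning, we only count the epochs
        self.epochs_seen += epochs

    def evaluate(self, data, labels):
        corrects = min(self.epochs_seen, len(data))
        return corrects, len(data) - corrects


samples = [0, 0, 0, 0]      # placeholder data, the content is irrelevant here
history = accuracy_per_epoch(DummyNetwork(), samples, samples,
                             samples, samples, epochs=3)
print(history)              # [0.25, 0.5, 0.75]
```

With the class from this section, a call could look like accuracy_per_epoch(ANN, train_imgs, train_labels_one_hot, test_imgs, test_labels, epochs=3), provided ANN is a freshly created network.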


Footnotes

1 Wan, Li; Matthew Zeiler; Sixin Zhang; Yann LeCun; Rob Fergus (2013). Regularization of Neural Networks using DropConnect. International Conference on Machine Learning (ICML).