29. Natural Language Processing: Classification

By Bernd Klein. Last modified: 19 Apr 2024.

Introduction

It might seem easy to find good text material for text classification examples. After all, hardly a minute of our daily lives goes by in which we are not dealing with written language: newspapers, books and, above all, the internet, most of which is probably still text-based. For our example classifiers, however, the texts must be machine-readable and preferably stored in plain text files, i.e. not formatted in Word or other formats. In addition, the texts must not be protected by copyright.

We use novels from Project Gutenberg as our examples.

The first task consists in training a classifier which can predict the author of a paragraph from a novel.

The second example will use novels in various languages, i.e. German, Danish, English, Spanish, French, Italian, Dutch and Swedish.


Author Prediction

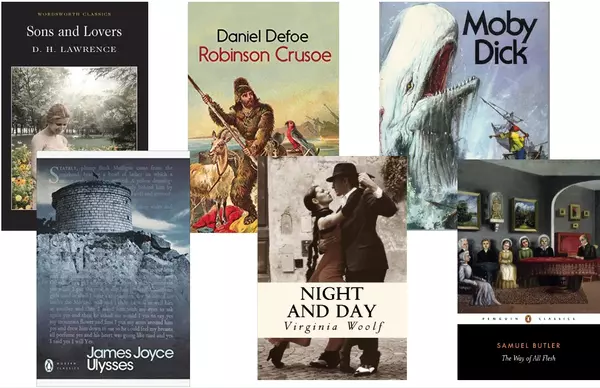

We want to demonstrate the concepts of the previous chapter of our Machine Learning tutorial in an extended example. We will use the following novels:

- Virginia Woolf: Night and Day

- Samuel Butler: The Way of all Flesh

- Herman Melville: Moby Dick

- David Herbert Lawrence: Sons and Lovers

- Daniel Defoe: The Life and Adventures of Robinson Crusoe

- James Joyce: Ulysses

We will train a classifier with these novels. This classifier should be able to predict the author of an arbitrary text passage.

We will segment the books into lists of paragraphs. To do so, we use the function 'text2paragraphs', which we introduced as an exercise in our chapter on file handling.

    def text2paragraphs(filename, min_size=1):
        """ A text contained in the file 'filename' will be read
        and chopped into paragraphs.
        Paragraphs with a string length of min_size or less will be ignored.
        A list of paragraph strings will be returned"""
        # the context manager closes the file after reading
        with open(filename) as fh:
            txt = fh.read()
        paragraphs = [para for para in txt.split("\n\n") if len(para) > min_size]
        return paragraphs
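To see what the `min_size` filter does, here is a small self-contained sketch. It applies the same splitting logic to an in-memory string instead of a file. The helper name `split_paragraphs` and the sample text are made up for illustration and are not part of the tutorial's code.

```python
def split_paragraphs(txt, min_size=1):
    """Split a string at blank lines and keep paragraphs longer than min_size."""
    return [para for para in txt.split("\n\n") if len(para) > min_size]


# Invented sample text with a heading and a very short paragraph
sample = "CHAPTER I\n\nIt was a Sunday evening in October.\n\nYes.\n\nShe poured out tea."

all_blocks = split_paragraphs(sample)                # every block is longer than 1 character
long_blocks = split_paragraphs(sample, min_size=10)  # headings and short lines are dropped

print(all_blocks)
print(long_blocks)
```

A larger threshold such as the `min_size=150` used for the novels mainly removes headings, short lines of dialogue and similar fragments that carry little information about an author's style.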

    labels = ['Virginia Woolf', 'Samuel Butler', 'Herman Melville',
              'David Herbert Lawrence', 'Daniel Defoe', 'James Joyce']

    files = ['night_and_day_virginia_woolf.txt', 'the_way_of_all_flash_butler.txt',
             'moby_dick_melville.txt', 'sons_and_lovers_lawrence.txt',
             'robinson_crusoe_defoe.txt', 'james_joyce_ulysses.txt']

    path = "books/"

    data = []      # all paragraphs of all books
    targets = []   # index of the author (position in 'labels') for each paragraph
    counter = 0
    for fname in files:
        paras = text2paragraphs(path + fname, min_size=150)
        data.extend(paras)
        targets += [counter] * len(paras)
        counter += 1

    # This cell is not strictly necessary, because train_test_split
    # shuffles the data by default.
    import random

    data_targets = list(zip(data, targets))
    # create a random permutation of the list:
    data_targets = random.sample(data_targets, len(data_targets))
    data, targets = list(zip(*data_targets))

Split into train and test sets:

    from sklearn.model_selection import train_test_split

    res = train_test_split(data, targets,
                           train_size=0.8,
                           test_size=0.2,
                           random_state=42)
    train_data, test_data, train_targets, test_targets = res
    len(train_data), len(test_data), len(train_targets), len(test_targets)
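The earlier comment about shuffling can be checked on toy data. The following sketch, which assumes scikit-learn is installed, compares the default behaviour of `train_test_split` with a call that passes `shuffle=False`. The lists `items` and `toy_targets` are invented for this illustration.

```python
from sklearn.model_selection import train_test_split

items = list(range(10))               # toy data in a fixed order
toy_targets = [i % 2 for i in items]  # toy targets

# Default behaviour: the data is shuffled before it is split.
train_s, test_s, _, _ = train_test_split(items, toy_targets,
                                         test_size=0.2, random_state=42)

# With shuffle=False the split simply cuts off the tail of the list.
train_o, test_o, _, _ = train_test_split(items, toy_targets,
                                         test_size=0.2, shuffle=False)

print(train_s, test_s)
print(train_o, test_o)
```

Because the default split already mixes the paragraphs of all books, a separate shuffling step beforehand does not change what the classifier gets to see.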

We create a Naive Bayes classifier:

    from sklearn.feature_extraction.text import CountVectorizer, ENGLISH_STOP_WORDS
    from sklearn.naive_bayes import MultinomialNB
    from sklearn import metrics

    # turn the paragraphs into word-count vectors, ignoring English stop words
    vectorizer = CountVectorizer(stop_words=list(ENGLISH_STOP_WORDS))
    vectors = vectorizer.fit_transform(train_data)

    # creating a classifier
    classifier = MultinomialNB(alpha=.01)
    classifier.fit(vectors, train_targets)

    vectors_test = vectorizer.transform(test_data)
    predictions = classifier.predict(vectors_test)

    accuracy_score = metrics.accuracy_score(test_targets,
                                            predictions)
    f1_score = metrics.f1_score(test_targets,
                                predictions,
                                average='macro')

    print("accuracy score: ", accuracy_score)
    print("F1-score: ", f1_score)

OUTPUT:

    accuracy score: 0.9243331518780621
    F1-score: 0.9206533945061474
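To make the roles of the word counts and of the smoothing parameter `alpha` more concrete, here is a pure-Python sketch of the idea behind a multinomial Naive Bayes classifier. It is a simplified illustration and not scikit-learn's implementation. The function names `train_nb` and `predict_nb`, the toy sentences and the class indices are all invented for this example.

```python
import math
from collections import Counter


def train_nb(docs, targets, alpha=0.01):
    """Estimate log priors and smoothed log word probabilities per class."""
    vocab = {word for doc in docs for word in doc.lower().split()}
    log_prior, log_likelihood = {}, {}
    for c in sorted(set(targets)):
        class_docs = [d for d, t in zip(docs, targets) if t == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in d.lower().split())
        # alpha is added to every word count, so unseen words never get probability 0
        total = sum(counts.values()) + alpha * len(vocab)
        log_likelihood[c] = {w: math.log((counts[w] + alpha) / total) for w in vocab}
    return log_prior, log_likelihood


def predict_nb(model, text):
    """Return the class with the highest log posterior; unknown words are skipped."""
    log_prior, log_likelihood = model
    scores = {}
    for c in log_prior:
        scores[c] = log_prior[c] + sum(
            log_likelihood[c][w] for w in text.lower().split() if w in log_likelihood[c]
        )
    return max(scores, key=scores.get)


toy_docs = ["the whale and the sea", "the ship hunted the whale",
            "tea in the drawing room", "she poured the tea"]
toy_targets = [0, 0, 1, 1]   # 0 and 1 are made-up class indices

model = train_nb(toy_docs, toy_targets)
first = predict_nb(model, "a whale at sea")
second = predict_nb(model, "tea for her")
print(first, second)
```

A word that never occurs in a class still gets a small non-zero probability thanks to `alpha`. Otherwise a single unseen word would rule that class out completely.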

We will now test this classifier with a different book by Virginia Woolf.

    paras = text2paragraphs(path + "the_voyage_out_virginia_woolf.txt", min_size=250)

    first_para, last_para = 100, 500
    vectors_test = vectorizer.transform(paras[first_para: last_para])
    #vectors_test = vectorizer.transform(["To be or not to be"])

    predictions = classifier.predict(vectors_test)
    print(predictions)

    # all tested paragraphs were written by Virginia Woolf (label 0)
    targets = [0] * (last_para - first_para)
    accuracy_score = metrics.accuracy_score(targets,
                                            predictions)
    precision_score = metrics.precision_score(targets,
                                              predictions,
                                              average='macro')
    f1_score = metrics.f1_score(targets,
                                predictions,
                                average='macro')

    print("accuracy score: ", accuracy_score)
    print("precision score: ", precision_score)
    print("F1-score: ", f1_score)

OUTPUT:

    [5 0 0 5 0 1 5 0 2 5 0 0 0 0 0 0 0 0 1 0 1 0 0 5 1 5 0 1 1 0 0 0 5 5 2 5 0 2 5 5 0 0 0 0 0 3 0 0 0 0 0 4 2 5 2 2 0 0 0 0 0 5 5 0 0 2 0 0 0 0 5 5 5 0 0 0 0 0 0 2 2 0 0 2 2 5 5 3 0 5 0 0 0 0 0 5 0 0 1 0 0 3 5 5 0 5 5 5 5 0 5 0 0 0 0 0 0 1 2 0 0 0 0 0 1 2 2 2 5 5 0 0 0 1 5 0 1 5 1 3 0 0 0 0 0 0 0 0 0 1 1 0 5 0 5 1 0 1 3 0 0 0 0 0 0 5 0 1 0 0 0 5 5 5 2 0 2 3 0 0 0 0 0 0 0 5 0 0 0 2 5 0 0 0 0 0 0 0 0 0 0 0 0 5 5 0 0 0 5 5 5 2 0 5 3 5 0 0 0 0 5 0 0 5 2 0 0 0 0 0 3 0 0 0 0 2 0 0 5 3 5 1 0 1 5 5 0 0 5 0 1 1 0 0 0 0 0 1 3 1 0 0 5 5 5 5 2 0 0 0 0 5 0 2 2 0 1 0 0 0 0 0 0 3 0 4 0 2 0 5 1 5 0 0 0 1 1 0 0 5 5 0 0 0 0 0 0 0 5 3 0 0 0 5 3 0 0 0 0 0 0 3 0 0 0 0 3 0 5 5 0 0 5 0 3 5 0 3 0 0 0 1 0 1 0 0 0 5 3 0 0 0 0 1 1 0 0 0 0 0 0 0 0 0 2 2 2 3 0 3 1 1 0 0 0 5 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 5 0 0 0 0 0 0 0 0]
    accuracy score: 0.61
    precision score: 0.16666666666666666
    F1-score: 0.12629399585921325

    predictions = classifier.predict_proba(vectors_test)
    print(predictions)

OUTPUT:

    [[2.61090538e-005 4.58403141e-006 8.39745922e-008 2.21565820e-006 2.87585932e-018 9.99967007e-001]
     [9.96542166e-001 1.54683474e-005 8.35075096e-005 2.06920501e-013 1.95113219e-015 3.35885767e-003]
     [9.97755017e-001 1.43504543e-009 4.61314594e-015 1.00732673e-009 9.95493304e-018 2.24498043e-003]
     ...
     [9.99999173e-001 8.27399966e-007 9.18473090e-041 1.63002943e-023 2.21223334e-045 1.23837240e-033]
     [9.99999997e-001 7.58272459e-017 5.62033816e-030 5.68107537e-042 1.40184221e-053 3.46498842e-009]
     [1.00000000e+000 6.17861563e-054 5.79780942e-060 9.92176557e-085 2.55581464e-109 1.82151421e-050]]

You may have hoped for a better result and may be disappointed. Yet, on the other hand, this result is quite impressive. In about 61 % of all cases we got the label 0, which stands for Virginia Woolf and her novel "Night and Day". In other words, our classifier recognized Woolf's writing style just by the words in roughly six out of ten paragraphs, even though they come from a different novel. The macro-averaged F1-score is so low only because every true label is 0: each of the other five classes contributes a score of 0 to the average.
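The gap between the accuracy and the macro-averaged F1-score can be reproduced by hand. The sketch below uses the figures reported above: 400 test paragraphs, all with the true label 0, of which 61 % (244) are predicted correctly. How the remaining 156 predictions are spread over the classes 1 to 5 is an assumption made for this illustration. It does not influence the result as long as each class is predicted at least once. The helper `macro_f1` is written for this example and is not scikit-learn's function.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


y_true = [0] * 400
# 244 correct predictions; the other 156 are spread over classes 1..5 (assumed split)
y_pred = [0] * 244 + [1] * 40 + [2] * 36 + [3] * 26 + [4] * 2 + [5] * 52

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
f1 = macro_f1(y_true, y_pred)
print(accuracy)
print(f1)
```

Class 0 alone reaches an F1-score of about 0.76. Dividing by the six classes that occur gives roughly 0.126, which agrees with the value printed by `metrics.f1_score`.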

Let us have a look at the first 10 paragraphs which we have tested:

    for i in range(0, 10):
        print(predictions[i], paras[i+first_para])

OUTPUT:

    [2.61090538e-05 4.58403141e-06 8.39745922e-08 2.21565820e-06 2.87585932e-18 9.99967007e-01]
    "That's the painful thing about pets," said Mr. Dalloway; "they die. The first sorrow I can remember was for the death of a dormouse. I regret to say that I sat upon it. Still, that didn't make one any the less sorry. Here lies the duck that Samuel Johnson sat on, eh? I was big for my age."

    [9.96542166e-01 1.54683474e-05 8.35075096e-05 2.06920501e-13 1.95113219e-15 3.35885767e-03]
    "Please tell me--everything." That was what she wanted to say. He had drawn apart one little chink and showed astonishing treasures. It seemed to her incredible that a man like that should be willing to talk to her. He had sisters and pets, and once lived in the country. She stirred her tea round and round; the bubbles which swam and clustered in the cup seemed to her like the union of their minds.

    [9.97755017e-01 1.43504543e-09 4.61314594e-15 1.00732673e-09 9.95493304e-18 2.24498043e-03]
    The talk meanwhile raced past her, and when Richard suddenly stated in a jocular tone of voice, "I'm sure Miss Vinrace, now, has secret leanings towards Catholicism," she had no idea what to answer, and Helen could not help laughing at the start she gave.

    [2.09796530e-05 1.42917078e-07 1.04866010e-10 2.79866828e-03 6.50273161e-19 9.97180209e-01]
    However, breakfast was over and Mrs. Dalloway was rising. "I always think religion's like collecting beetles," she said, summing up the discussion as she went up the stairs with Helen. "One person has a passion for black beetles; another hasn't; it's no good arguing about it. What's _your_ black beetle now?"

    [1.00000000e+00 4.73272222e-43 5.53585689e-36 3.03806804e-34 9.36524371e-72 2.47499068e-27]
    It was as though a blue shadow had fallen across a pool. Their eyes became deeper, and their voices more cordial. Instead of joining them as they began to pace the deck, Rachel was indignant with the prosperous matrons, who made her feel outside their world and motherless, and turning back, she left them abruptly. She slammed the door of her room, and pulled out her music. It was all old music--Bach and Beethoven, Mozart and Purcell--the pages yellow, the engraving rough to the finger. In three minutes she was deep in a very difficult, very classical fugue in A, and over her face came a queer remote impersonal expression of complete absorption and anxious satisfaction. Now she stumbled; now she faltered and had to play the same bar twice over; but an invisible line seemed to string the notes together, from which rose a shape, a building. She was so far absorbed in this work, for it was really difficult to find how all these sounds should stand together, and drew upon the whole of her faculties, that she never heard a knock at the door. It was burst impulsively open, and Mrs. Dalloway stood in the room leaving the door open, so that a strip of the white deck and of the blue sea appeared through the opening. The shape of the Bach fugue crashed to the ground.

    [1.47496372e-02 9.85036954e-01 8.17006727e-09 4.84854634e-06 1.68190382e-22 2.08551677e-04]
    "He wrote awfully well, didn't he?" said Clarissa; "--if one likes that kind of thing--finished his sentences and all that. _Wuthering_ _Heights_! Ah--that's more in my line. I really couldn't exist without the Brontes! Don't you love them? Still, on the whole, I'd rather live without them than without Jane Austen."

    [1.89419021e-02 2.66105511e-13 4.02300609e-02 9.51346874e-08 1.95716107e-23 9.40827942e-01]
    How divine!--and yet what nonsense!" She looked lightly round the room. "I always think it's _living_, not dying, that counts. I really respect some snuffy old stockbroker who's gone on adding up column after column all his days, and trotting back to his villa at Brixton with some old pug dog he worships, and a dreary little wife sitting at the end of the table, and going off to Margate for a fortnight--I assure you I know heaps like that--well, they seem to me _really_ nobler than poets whom every one worships, just because they're geniuses and die young. But I don't expect _you_ to agree with me!"

    [9.99236016e-01 3.53388512e-04 2.06139744e-14 2.85109976e-08 5.73336083e-13 4.10567227e-04]
    "When you're my age you'll see that the world is _crammed_ with delightful things. I think young people make such a mistake about that--not letting themselves be happy. I sometimes think that happiness is the only thing that counts. I don't know you well enough to say, but I should guess you might be a little inclined to--when one's young and attractive--I'm going to say it!--_every_thing's at one's feet." She glanced round as much as to say, "not only a few stuffy books and Bach."

    [1.89310339e-11 1.15667125e-20 1.00000000e+00 2.09826905e-12 1.52563273e-10 8.05977400e-15]
    The shores of Portugal were beginning to lose their substance; but the land was still the land, though at a great distance. They could distinguish the little towns that were sprinkled in the folds of the hills, and the smoke rising faintly. The towns appeared to be very small in comparison with the great purple mountains behind them.

    [6.03644731e-02 4.73461977e-08 8.36869820e-02 3.45875011e-06 8.14459953e-14 8.55945039e-01]
    Rachel followed her eyes and found that they rested for a second, on the robust figure of Richard Dalloway, who was engaged in striking a match on the sole of his boot; while Willoughby expounded something, which seemed to be of great interest to them both.
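Each probability row printed above contains one value per author, in the order of the `labels` list. The predicted author is the position of the largest value. The following sketch shows this for the first two rows, with the numbers copied from the output. The variable names `rows` and `winners` are chosen for this illustration only.

```python
labels = ['Virginia Woolf', 'Samuel Butler', 'Herman Melville',
          'David Herbert Lawrence', 'Daniel Defoe', 'James Joyce']

# First two probability rows copied from the output above
rows = [
    [2.61090538e-05, 4.58403141e-06, 8.39745922e-08,
     2.21565820e-06, 2.87585932e-18, 9.99967007e-01],
    [9.96542166e-01, 1.54683474e-05, 8.35075096e-05,
     2.06920501e-13, 1.95113219e-15, 3.35885767e-03],
]

winners = []
for row in rows:
    best = max(range(len(row)), key=row.__getitem__)  # index of the largest probability
    winners.append(labels[best])
    print(best, labels[best], round(row[best], 4))
```

In both rows almost the whole probability mass sits on a single author, so the classifier is very sure of itself even when it is wrong, as with the first paragraph.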

The paragraph with the index 100 was predicted as being from "Ulysses" by James Joyce. This paragraph contains the name "Samuel Johnson". "Ulysses" contains many occurrences of "Samuel" and "Johnson", whereas "Night and Day" contains neither "Samuel" nor "Johnson". So, this might be one of the reasons for the prediction.
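A claim like this can be checked by counting words in the texts. The sketch below shows one way to do it with a regular expression. The helper `count_word` is hypothetical, and the two short strings only stand in for the full texts of the novels, which would have to be read from the files.

```python
import re


def count_word(text, word):
    """Count whole-word, case-sensitive occurrences of `word` in `text`."""
    return len(re.findall(r"\b" + re.escape(word) + r"\b", text))


# Stand-in strings; in practice these would be the complete novels.
toy_texts = {
    "book A": "Samuel sat down. Dr Johnson and Samuel talked of Johnsonian wit.",
    "book B": "Katharine poured the tea and said nothing.",
}

for title, text in toy_texts.items():
    print(title, {w: count_word(text, w) for w in ("Samuel", "Johnson")})
```

Note that `CountVectorizer` lowercases the text by default, so a case-insensitive count would be closer to what the classifier actually sees.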

We trained a Naive Bayes classifier by using MultinomialNB. Now we want to train a neural network, for which we will use MLPClassifier. Be warned: training will take a long time, unless you have an extremely fast computer. On my computer it takes about five minutes!

    from sklearn.feature_extraction.text import CountVectorizer, ENGLISH_STOP_WORDS
    from sklearn.neural_network import MLPClassifier
    from sklearn import metrics

    vectorizer = CountVectorizer(stop_words=list(ENGLISH_STOP_WORDS))
    vectors = vectorizer.fit_transform(train_data)

    print("Creating a classifier. This will take some time!")
    classifier = MLPClassifier(random_state=1, max_iter=300).fit(vectors, train_targets)

OUTPUT:

    Creating a classifier. This will take some time!

    vectors_test = vectorizer.transform(test_data)
    predictions = classifier.predict(vectors_test)

    accuracy_score = metrics.accuracy_score(test_targets,
                                            predictions)
    f1_score = metrics.f1_score(test_targets,
                                predictions,
                                average='macro')

    print("accuracy score: ", accuracy_score)
    print("F1-score: ", f1_score)

OUTPUT:

    accuracy score: 0.9150789330430049
    F1-score: 0.9187366404817379

Language Prediction

We will now train a classifier which is capable of recognizing the language of a text. It covers the following languages:

German, Danish, English, Spanish, French, Italian, Dutch and Swedish

We will use about two books per language for training and testing purposes (three files for German and a single one for Danish). The authors and book titles should be recognizable in the following file names:

    import os

    os.listdir("books/various_languages")

OUTPUT:

    ['de_goethe_leiden_des_jungen_werther1.txt',
     'es_antonio_de_alarcon_novelas_cortas.txt',
     'en_virginia_woolf_night_and_day.txt',
     'fr_emile_zola_la_bete_humaine.txt',
     'license',
     'it_amato_gennaro_una_sfida_al_polo.txt',
     'en_herman_melville_moby_dick.txt',
     'se_august_strindberg_röda_rummet.txt',
     'se_selma_lagerlöf_bannlyst.txt',
     'nl_cornelis_johannes_kieviet_Dik_Trom_en_sijn_dorpgenooten.txt',
     'nl_lodewijk_van_deyssel.txt',
     'fr_emile_zola_germinal.txt',
     'es_mguel_de_cervantes_don_cuijote.txt',
     'de_goethe_leiden_des_jungen_werther2.txt',
     'original',
     'it_alessandro_manzoni_i_promessi_sposi.txt',
     'dk_andreas_lauritz_clemmensen_beskrivelser_og_tegninger.txt',
     'de_nietzsche_also_sprach_zarathustra.txt']

    path = "books/various_languages/"

    files = os.listdir("books/various_languages")
    # the first two characters of each text file name are the language code
    labels = {fname[:2] for fname in files if fname.endswith(".txt")}
    labels = sorted(list(labels))
    labels

OUTPUT:

    ['de', 'dk', 'en', 'es', 'fr', 'it', 'nl', 'se']
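The same two-letter prefix can be used to see how many files are available per language. The following sketch counts them with `collections.Counter`. The file names are copied from the directory listing above, and the variable name `per_language` is chosen for this illustration.

```python
from collections import Counter

# File names copied from the directory listing above
files = ['de_goethe_leiden_des_jungen_werther1.txt',
         'es_antonio_de_alarcon_novelas_cortas.txt',
         'en_virginia_woolf_night_and_day.txt',
         'fr_emile_zola_la_bete_humaine.txt',
         'license',
         'it_amato_gennaro_una_sfida_al_polo.txt',
         'en_herman_melville_moby_dick.txt',
         'se_august_strindberg_röda_rummet.txt',
         'se_selma_lagerlöf_bannlyst.txt',
         'nl_cornelis_johannes_kieviet_Dik_Trom_en_sijn_dorpgenooten.txt',
         'nl_lodewijk_van_deyssel.txt',
         'fr_emile_zola_germinal.txt',
         'es_mguel_de_cervantes_don_cuijote.txt',
         'de_goethe_leiden_des_jungen_werther2.txt',
         'original',
         'it_alessandro_manzoni_i_promessi_sposi.txt',
         'dk_andreas_lauritz_clemmensen_beskrivelser_og_tegninger.txt',
         'de_nietzsche_also_sprach_zarathustra.txt']

# Count the text files per language prefix; 'license' and 'original' are skipped
per_language = Counter(fname[:2] for fname in files if fname.endswith(".txt"))
print(sorted(per_language.items()))
```

The counts show that the languages are not represented equally, which is worth keeping in mind when reading the scores further down.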


    data = []
    targets = []
    for fname in files:
        if fname.endswith(".txt"):
            paras = text2paragraphs(path + fname, min_size=150)
            data.extend(paras)
            # the file name prefix identifies the language of the book
            country = fname[:2]
            index = labels.index(country)
            targets += [index] * len(paras)

    from sklearn.model_selection import train_test_split

    res = train_test_split(data, targets,
                           train_size=0.8,
                           test_size=0.2,
                           random_state=42)
    train_data, test_data, train_targets, test_targets = res

    from sklearn.feature_extraction.text import CountVectorizer, ENGLISH_STOP_WORDS
    from sklearn.naive_bayes import MultinomialNB
    from sklearn import metrics

    vectorizer = CountVectorizer(stop_words=list(ENGLISH_STOP_WORDS))
    #vectorizer = CountVectorizer()
    vectors = vectorizer.fit_transform(train_data)

    # creating a classifier
    classifier = MultinomialNB(alpha=.01)
    classifier.fit(vectors, train_targets)

    vectors_test = vectorizer.transform(test_data)
    predictions = classifier.predict(vectors_test)

    accuracy_score = metrics.accuracy_score(test_targets,
                                            predictions)
    f1_score = metrics.f1_score(test_targets,
                                predictions,
                                average='macro')

    print("accuracy score: ", accuracy_score)
    print("F1-score: ", f1_score)

OUTPUT:

    accuracy score: 0.9952193475815523
    F1-score: 0.9969874823729692

Let us check this classifier with some arbitrary texts in different languages:

    some_texts = ["Es ist nicht von Bedeutung, wie langsam du gehst, solange du nicht stehenbleibst.",
                  "Man muss das Unmögliche versuchen, um das Mögliche zu erreichen.",
                  "It's so much darker when a light goes out than it would have been if it had never shone.",
                  "Rien n'est jamais fini, il suffit d'un peu de bonheur pour que tout recommence.",
                  "Girano le stelle nella notte ed io ti penso forte forte e forte ti vorrei"]
    sources = ["Konfuzius", "Hermann Hesse", "John Steinbeck", "Emile Zola", "Gianna Nannini" ]

    vtest = vectorizer.transform(some_texts)
    predictions = classifier.predict(vtest)
    for label in predictions:
        print(label, labels[label])

OUTPUT:

    0 de
    0 de
    2 en
    4 fr
    5 it
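The `sources` list is defined in the code but not used in the output. A small sketch shows how the predicted language codes could be printed next to the sources. The `predictions` list below is copied from the output above rather than computed, so that the example runs without the trained classifier.

```python
labels = ['de', 'dk', 'en', 'es', 'fr', 'it', 'nl', 'se']
sources = ["Konfuzius", "Hermann Hesse", "John Steinbeck", "Emile Zola", "Gianna Nannini"]
predictions = [0, 0, 2, 4, 5]   # copied from the output above

# Pair each source with the language code of its predicted label
predicted_codes = [labels[label] for label in predictions]
for source, code in zip(sources, predicted_codes):
    print(f"{source:15} -> {code}")
```

All five quotations are assigned to the language in which they are written.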
